Why Enterprise AI Fails Without Proper Data Governance: The MCP Solution

Discover why 70-80% of enterprise AI projects fail and how proper data governance frameworks with MCP can transform AI into secure, compliant business assets.

Enterprise AI initiatives fail at an alarming rate—studies indicate that 70-80% of AI projects never make it to production, and those that do often struggle with adoption, security breaches, or compliance violations. While organizations typically blame technology limitations, insufficient training data, or lack of AI expertise, the root cause runs deeper: the absence of proper data governance frameworks designed for AI systems.

Without robust data governance, AI implementations become security vulnerabilities, compliance nightmares, and operational bottlenecks that create more problems than they solve. The solution lies not in abandoning AI ambitions, but in establishing governance frameworks that enable secure, compliant, and scalable AI deployment across the enterprise.

The Hidden Crisis in Enterprise AI

Behind every failed AI initiative lies a governance failure. Organizations rush to deploy AI tools without establishing clear policies for data access, user permissions, audit trails, or compliance monitoring. The results are predictable: security incidents, regulatory violations, and AI systems that employees cannot trust or safely use.

The Shadow IT Problem

Employees frustrated with slow, limited enterprise AI implementations turn to consumer AI tools, uploading sensitive business data to external platforms without IT oversight. This shadow IT phenomenon creates uncontrolled data exposure risks while undermining official AI initiatives.

The Compliance Trap

Organizations in regulated industries discover too late that their AI implementations cannot meet audit requirements. Without proper logging, access controls, and data lineage tracking, AI systems become compliance liabilities rather than business assets.

The Security Blind Spot

Traditional security frameworks assume human users making deliberate data access decisions. AI systems that can rapidly access and process vast amounts of enterprise data require fundamentally different security approaches that most organizations have not developed.

Data Governance Fundamentals for AI

Effective AI governance requires rethinking traditional data management approaches. While human users typically access specific datasets for defined purposes, AI systems need contextual access to multiple data sources while maintaining strict security boundaries.

Identity and Access Management for AI

AI systems require sophisticated identity management that goes beyond traditional user authentication. Every AI interaction must be traceable to a specific user, with permissions that reflect that user's role, department, and current responsibilities.

- Dynamic access control that adapts to changing organizational structures

- Contextual permissions based on user roles and current responsibilities

- Granular access control across multiple systems and data sources
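A minimal sketch of the idea above: every AI request carries the identity of the user behind it, and the data sources the assistant may consult are filtered by that user's roles. The `User` type, the `SOURCE_ROLES` mapping, and the source names are hypothetical and only illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: str
    roles: frozenset[str]


# Hypothetical mapping from data source to the roles allowed to read it.
SOURCE_ROLES: dict[str, frozenset[str]] = {
    "crm.opportunities": frozenset({"sales", "sales_manager"}),
    "finance.ledger": frozenset({"finance"}),
    "hr.directory": frozenset({"sales", "finance", "hr"}),
}


def allowed_sources(user: User, requested: list[str]) -> list[str]:
    """Return only the requested sources this user's roles permit.

    Sources missing from SOURCE_ROLES are denied by default.
    """
    return [
        source
        for source in requested
        if user.roles & SOURCE_ROLES.get(source, frozenset())
    ]


alice = User(user_id="alice", roles=frozenset({"sales"}))
print(allowed_sources(alice, ["crm.opportunities", "finance.ledger", "unknown.db"]))
# prints ['crm.opportunities']
```

Because the check runs on the user's roles rather than on a shared service account, the assistant cannot return data the user could not have reached directly.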

Audit and Compliance Requirements

Regulatory frameworks increasingly recognize AI systems as decision-making entities that require comprehensive audit trails. Organizations must track not just what data AI systems access, but how that data influences AI responses and business decisions.

- GDPR Implications: European privacy regulations require organizations to explain automated decision-making processes and provide individuals with rights regarding AI-driven decisions.

- SOX Compliance: Financial reporting requirements extend to AI systems that influence financial decisions or have access to financial data.

- Industry-Specific Regulations: Healthcare (HIPAA), financial services (SOX, FINRA), and other regulated industries have specific requirements for AI system governance.

Data Classification and Protection

AI systems often require access to data with varying sensitivity levels within a single interaction. Effective governance frameworks classify enterprise data and establish clear policies for how AI systems can access, process, and retain information at each classification level.
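As an illustration, a classification policy can be expressed as a small lookup that is applied before any document reaches the AI system. The four levels, the `POLICY` table, and the document names below are assumptions for this sketch, not a standard scheme.

```python
from enum import IntEnum


class Classification(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical policy: what an AI system may do with data at each level.
POLICY = {
    Classification.PUBLIC: {"access": True, "retain_in_logs": True},
    Classification.INTERNAL: {"access": True, "retain_in_logs": True},
    Classification.CONFIDENTIAL: {"access": True, "retain_in_logs": False},
    Classification.RESTRICTED: {"access": False, "retain_in_logs": False},
}


def filter_context(documents, clearance):
    """Keep documents the policy allows and the user's clearance covers."""
    return [
        doc
        for doc in documents
        if POLICY[doc["classification"]]["access"]
        and doc["classification"] <= clearance
    ]


docs = [
    {"id": "press-release", "classification": Classification.PUBLIC},
    {"id": "q3-forecast", "classification": Classification.CONFIDENTIAL},
    {"id": "merger-memo", "classification": Classification.RESTRICTED},
]
visible = filter_context(docs, clearance=Classification.INTERNAL)
print([d["id"] for d in visible])
# prints ['press-release']
```

In this sketch, restricted data never reaches the AI system regardless of who asks, while the other levels depend on the requesting user's clearance.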

Common Governance Failures and Their Consequences

The Permissive Access Trap

Many organizations start AI implementations with overly permissive data access policies, reasoning that AI systems need broad access to provide valuable insights. This approach creates several critical vulnerabilities:

- Data Exposure Risk: AI systems with broad access can inadvertently expose sensitive information through responses to seemingly innocuous queries.

- Privilege Escalation: Users may discover they can access information through AI systems that they cannot access directly, bypassing traditional security controls.

- Compliance Violations: Broad AI access often violates data minimization principles required by privacy regulations.

The Audit Trail Gap

Traditional logging systems capture direct data access but often miss AI interactions that synthesize information from multiple sources. This creates dangerous gaps in audit trails that can lead to compliance failures and security incidents.

Missing Context: Standard access logs show that an AI system accessed specific databases but not how that information was used in AI responses or business decisions.

Response Attribution: Organizations struggle to trace AI responses back to their source data, making it difficult to verify accuracy, identify bias, or respond to data subject requests under privacy regulations.

Decision Accountability: When AI systems influence business decisions, organizations must be able to demonstrate the data and logic behind those recommendations. Incomplete audit trails make this accountability impossible.
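One way to close these gaps is to write a single audit record per AI interaction that ties the user, the query, the source records consulted, and the response together. The sketch below is illustrative only; the field names and record identifiers are assumptions, and a real deployment would write to tamper-evident storage rather than print.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(user_id, query, sources, response):
    """Build one audit entry that ties an AI response to its inputs."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "query": query,
        "sources": sorted(sources),
        # Store a digest so the log can prove which response was
        # returned without duplicating potentially sensitive text.
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
    }


record = build_audit_record(
    user_id="alice",
    query="Summarize open opportunities for ACME",
    sources=["crm.opportunities/4411", "crm.accounts/87"],
    response="ACME has two open opportunities...",
)
print(json.dumps(record, indent=2))
```

With records like this, a response can be traced back to the specific source records that fed it, which is what data subject requests and accuracy reviews need.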

The Integration Security Problem

Each AI integration with enterprise systems creates new attack vectors and compliance risks. Organizations often focus on the AI technology itself while overlooking the security implications of connecting AI to sensitive business systems.

- API Vulnerabilities: Custom integrations often lack production security controls.

- Credential Management: Long-lived credentials become attractive attack targets.

- Data Leakage: Poor design may expose more data than necessary.
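To make the credential point concrete, here is a rough sketch of short-lived, scope-bound tokens built with Python's standard library. The token format, the `issue_token` and `verify_token` helpers, and the hard-coded secret are all assumptions for illustration; a production system would normally rely on an established standard and a secrets manager.

```python
import hashlib
import hmac
import time

SECRET = b"demo-only-secret"  # Assumption: in practice, loaded from a secrets manager.


def issue_token(user_id, scope, ttl_seconds=300, now=None):
    """Issue a token bound to one user and scope that expires after ttl_seconds."""
    issued = int(time.time() if now is None else now)
    expires = issued + ttl_seconds
    payload = f"{user_id}|{scope}|{expires}"
    signature = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{signature}"


def verify_token(token, required_scope, now=None):
    """Return True only for an untampered, unexpired token with the right scope."""
    try:
        user_id, scope, expires, signature = token.split("|")
    except ValueError:
        return False  # Wrong number of fields: reject malformed tokens.
    payload = f"{user_id}|{scope}|{expires}"
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking signature bytes via timing.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    current = time.time() if now is None else now
    return scope == required_scope and current < int(expires)


token = issue_token("alice", "crm:read", ttl_seconds=300, now=1000)
print(verify_token(token, "crm:read", now=1200))  # prints True
print(verify_token(token, "crm:read", now=1300))  # prints False (expired)
```

A stolen token of this kind is useful only for one scope and only for a few minutes, which limits the damage compared with a long-lived API key.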

Industry-Specific Governance Challenges

Financial Services

Financial institutions face unique AI governance challenges due to regulatory requirements, fiduciary responsibilities, and the high-stakes nature of financial decisions.

- Regulatory Compliance: AI systems that influence trading decisions, loan approvals, or customer recommendations must comply with FINRA, SEC, and banking regulations.

- Market Abuse Prevention: AI systems with access to material non-public information must include controls that prevent insider trading or market manipulation.

- Customer Privacy: Financial AI systems must protect customer financial information while providing personalized services.

Healthcare

Healthcare organizations must balance AI innovation with patient privacy, safety requirements, and complex regulatory compliance.

- HIPAA Compliance: AI systems accessing protected health information (PHI) must include comprehensive access controls and audit trails.

- Clinical Decision Support: AI systems that influence patient care decisions may be regulated by the FDA as medical devices, depending on their intended use and function.

- Research vs. Clinical Use: Healthcare AI often spans research and clinical applications with different governance requirements.

Manufacturing and Supply Chain

Industrial organizations face AI governance challenges related to operational technology, supply chain security, and intellectual property protection.

- OT/IT Convergence: AI systems bridging operational and information technology domains require governance frameworks addressing both cybersecurity and safety.

- Supply Chain Security: AI systems with access to supplier information must prevent intellectual property theft and supply chain attacks.

- Quality and Safety: AI systems influencing manufacturing processes must maintain audit trails supporting safety certifications.

Building Effective AI Governance

Governance Framework Development

Successful AI governance begins with clear policies that address the unique characteristics of AI systems while building on existing enterprise governance foundations.

- AI-Specific Policies: Governance policies specifically designed for AI systems, covering data access patterns, decision-making processes, and human oversight requirements.

- Risk Assessment: Risk assessment processes that evaluate potential data exposure, compliance violations, and operational risks before AI deployment.

- Stakeholder Engagement: Collaboration between IT, security, compliance, legal, and business teams to ensure policies address all relevant concerns.

Implementation Best Practices

- Phased Deployment: Organizations should implement AI governance controls in phases, starting with low-risk use cases and expanding to more sensitive applications as governance maturity increases.

- Continuous Monitoring: AI governance requires ongoing monitoring of system behavior, access patterns, and compliance metrics rather than one-time assessments.

- Regular Audits: Organizations should conduct regular audits of AI system governance controls, including access permissions, audit log completeness, and compliance with established policies.

Change Management and Training

- User Education: Training on proper AI system usage, data handling requirements, and governance compliance responsibilities.

- Administrator Training: Specialized training on AI governance tools, monitoring techniques, and incident response procedures.

- Executive Awareness: Leadership understanding of AI governance risks, compliance requirements, and the business impact of governance failures.

The Path Forward

Enterprise AI success requires treating data governance as a foundational requirement rather than an operational afterthought. Organizations that establish robust governance frameworks before widespread AI deployment position themselves for sustainable AI transformation while avoiding the security, compliance, and operational risks that derail many AI initiatives.

The choice is clear: implement proper AI governance from the beginning, or face the inevitable consequences of ungoverned AI systems that create more problems than they solve.

Why Palma.ai Delivers Enterprise AI Governance

While understanding AI governance requirements is essential, implementing effective governance at enterprise scale requires sophisticated infrastructure that most organizations cannot build internally. Palma.ai provides the comprehensive governance platform that transforms AI governance theory into operational reality.

Enterprise-Grade RBAC Implementation

Palma.ai's platform provides the sophisticated role-based access control that enterprise AI requires. Unlike basic permission systems, Palma.ai enables granular, contextual access control that adapts to user roles, current responsibilities, and organizational changes. Sales teams access their specific customer data and opportunities, while finance teams connect to appropriate financial systems and reports.

Comprehensive Audit and Compliance Capabilities

Palma.ai captures complete audit trails of every AI interaction with enterprise systems, providing the detailed logging that compliance frameworks require. Organizations can demonstrate exactly what data AI systems accessed, how that information influenced AI responses, and which users were involved in each interaction.

Zero Trust Security Architecture

Palma.ai implements zero trust principles specifically designed for AI systems. Every AI interaction with enterprise data requires explicit authentication and authorization. No AI assistant receives blanket access to enterprise systems—instead, permissions are dynamically evaluated based on current user context, session security, and data classification levels.

Centralized Governance Control

Rather than managing governance across dozens of separate AI integrations, Palma.ai provides centralized governance control through its MCP gateway platform. AI teams can establish enterprise-wide AI policies, monitor compliance across all AI deployments, and identify incidents from a single management interface.

Scalable Governance Operations

Palma.ai's multi-tenant architecture supports thousands of users across different departments and business units while maintaining consistent governance controls. Each team gets AI assistants tailored to their specific needs and data access requirements, but all operate within the same enterprise governance framework.

The reality of enterprise AI governance is that success requires more than good intentions and policy documents—it requires purpose-built infrastructure that makes governance controls practical and scalable.

Palma.ai provides that infrastructure, enabling organizations to deploy powerful AI assistants while maintaining the security, compliance, and control that enterprise operations demand.

Organizations serious about AI transformation cannot afford to treat governance as an afterthought. With Palma.ai, proper AI governance becomes the foundation for sustainable AI success rather than a barrier to innovation.

Read More

How to Give 500 Developers MCP Access Without Losing Control

The enterprise governance playbook for rolling out AI agent MCP connections at scale. Access control, data boundaries, approval workflows, audit trails, and cost tracking — everything you need to unlock MCPs without security, compliance, or budget surprises.

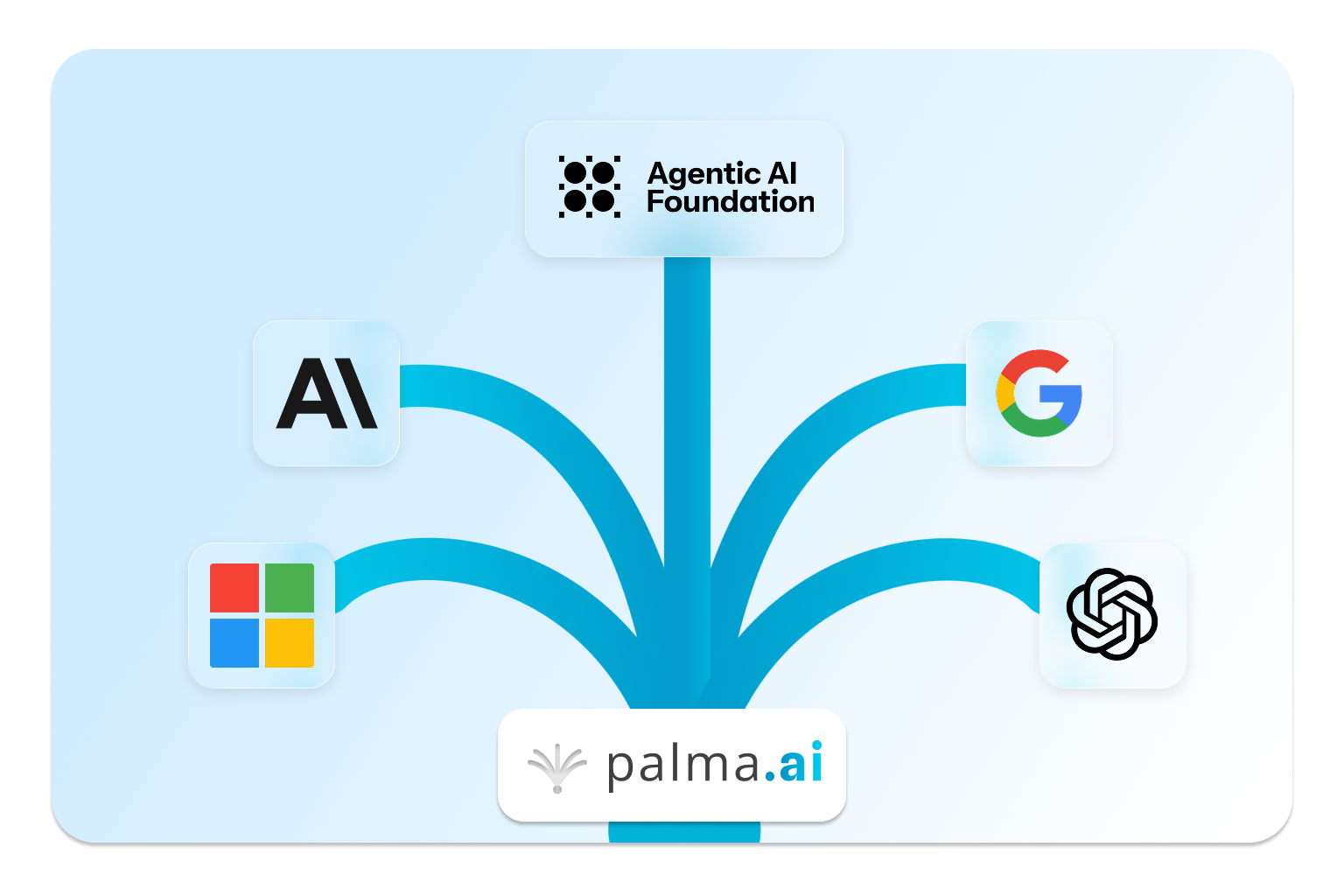

Palma.ai Joins OpenAI, Anthropic & Microsoft as AAIF Member

Palma.ai is proud to join the Agentic Artificial Intelligence Foundation (AAIF) as a Silver-tier member alongside founding members Anthropic, OpenAI, Google, AWS, and Microsoft to shape the future of AI agent standards.

Ready to Future-proof your AI Strategy?

Transform your business with secure, controlled AI integration

Connect your enterprise systems to AI assistants while maintaining complete control over data access and user permissions.