The New Protocol Era: How MCP and Agent2Agent Are Following the Kubernetes Playbook

Google's donation of Agent2Agent to the Linux Foundation signals standardized AI agent communication. Discover how MCP and A2A protocols are creating the Kubernetes moment for enterprise AI.

The enterprise AI landscape is at a defining moment reminiscent of the container orchestration revolution a decade ago. With Google's donation of the Agent2Agent (A2A) protocol project to the Linux Foundation, we're witnessing the emergence of standardized protocols that could fundamentally reshape how AI systems communicate and collaborate. This mirrors the journey of Kubernetes, which Google donated to the Cloud Native Computing Foundation in 2015 and which went on to become the de facto standard for container orchestration.

The parallels between today's AI protocol landscape and the container orchestration evolution of the 2010s are striking – and the implications for enterprise architecture are profound.

The Kubernetes Precedent: From Proprietary to Open Standard

In 2014, the container orchestration landscape was fragmented. Docker Swarm, Apache Mesos, and various proprietary solutions competed for dominance, each with incompatible APIs and deployment models. Organizations faced vendor lock-in, limited interoperability, and the complexity of managing multiple orchestration platforms.

Google's decision to open-source Kubernetes and donate it to the Linux Foundation's Cloud Native Computing Foundation changed everything. By establishing a vendor-neutral, community-driven standard, Kubernetes largely ended that fragmentation and enabled the explosive growth of cloud-native computing. Today, Kubernetes orchestrates workloads across virtually every major cloud platform and enterprise data center.

The key lesson: open standards, backed by major technology companies and governed by neutral foundations, give entire technology ecosystems the base they need to flourish.

The AI Protocol Parallel: MCP and Agent2Agent

Today's AI agent landscape mirrors the pre-Kubernetes container world. Organizations deploy AI assistants from multiple vendors, each with proprietary integration methods and communication protocols. Sales teams use one AI platform, support teams another, and finance teams a third – all operating in isolation with limited ability to share context or collaborate.

Enter two complementary protocols that promise to solve this fragmentation:

Model Context Protocol (MCP): The Data Integration Standard

Developed by Anthropic, MCP addresses how AI applications securely connect with external data sources and business systems. MCP provides a standardized way for AI assistants to access enterprise databases, APIs, and applications while maintaining security and governance controls.

Think of MCP as the "data connectivity layer" – it solves the challenge of giving AI assistants contextual awareness of business environments without requiring custom integrations for each use case.
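As a rough illustration of the "data connectivity layer" idea, the sketch below shows a single server-side registry that exposes business systems as named tools, which any assistant can discover and call through the same two operations. It is a toy, in-process model and not the MCP SDK or wire format; `ToolServer`, `list_tools`, `call_tool`, the `lookup_order` tool, and the `ORDERS` data are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolServer:
    """Toy stand-in for a server that exposes business systems as named tools."""
    name: str
    _tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)
    _descriptions: Dict[str, str] = field(default_factory=dict)

    def register(self, tool_name: str, description: str, fn: Callable[..., Any]) -> None:
        self._tools[tool_name] = fn
        self._descriptions[tool_name] = description

    def list_tools(self) -> List[Dict[str, str]]:
        # Discovery: the assistant learns what is available without custom glue code.
        return [{"name": n, "description": d} for n, d in sorted(self._descriptions.items())]

    def call_tool(self, tool_name: str, arguments: Dict[str, Any]) -> Any:
        if tool_name not in self._tools:
            raise KeyError(f"unknown tool: {tool_name}")
        return self._tools[tool_name](**arguments)


# Hypothetical order data standing in for an enterprise system.
ORDERS = {"A-100": {"status": "shipped", "total": 42.5}}

crm = ToolServer(name="crm")
crm.register(
    "lookup_order",
    "Return the status of an order by id",
    lambda order_id: ORDERS.get(order_id, {"status": "not found"}),
)

tools = crm.list_tools()
result = crm.call_tool("lookup_order", {"order_id": "A-100"})
print(tools)
print(result)
```

The point of the design is that adding a new data source means registering another tool, not writing another assistant-specific integration.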

Agent2Agent (A2A): The Inter-Agent Communication Standard

A2A is an open protocol that gives agents a standard way to collaborate with each other, regardless of the underlying framework or vendor. It addresses a critical challenge in the AI landscape: enabling generative AI agents, built on diverse frameworks by different companies and running on separate servers, to communicate and collaborate effectively as agents, not just as tools.

Where MCP connects AI to data, A2A connects AI to other AI. A2A follows a client-server model where agents communicate over standard web protocols like HTTP using structured JSON messages. This enables sophisticated multi-agent workflows where specialized AI assistants can delegate tasks, share context, and coordinate complex business processes.
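The client-server exchange described above can be sketched as one structured JSON request and one JSON reply. To keep the example runnable without a network, the HTTP hop is replaced by a direct function call. The envelope fields (`id`, `method`, `params`, `result`, `error`) and the `delegate` method name are illustrative assumptions for this sketch, not taken from the A2A specification.

```python
import json
import uuid
from typing import Any, Dict


def build_request(method: str, params: Dict[str, Any]) -> str:
    """Client agent: serialize a structured request as JSON."""
    return json.dumps({"id": str(uuid.uuid4()), "method": method, "params": params})


def handle_request(raw: str) -> str:
    """Remote agent: parse the JSON request and return a JSON reply."""
    request = json.loads(raw)
    if request["method"] != "delegate":
        reply = {"id": request["id"], "error": f"unsupported method: {request['method']}"}
    else:
        task = request["params"]["task"]
        reply = {
            "id": request["id"],
            "result": {"status": "completed", "summary": f"handled: {task}"},
        }
    return json.dumps(reply)


# In a real deployment raw_request would travel in an HTTP request body.
raw_request = build_request("delegate", {"task": "summarize open incidents"})
response = json.loads(handle_request(raw_request))
print(response["result"]["summary"])
```

Echoing the request `id` in the reply lets the client match responses to requests, which matters once several delegations are in flight at the same time.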

The Complementary Architecture: MCP + A2A = Complete AI Ecosystem

MCP and A2A are not competing standards – they're complementary protocols that together create a complete architecture for enterprise AI:

MCP provides the "downward" integration – connecting AI agents to enterprise data sources, APIs, and business systems. This gives individual agents the contextual knowledge they need to be effective.

A2A provides the "lateral" integration – enabling AI agents to communicate with each other, coordinate activities, and create sophisticated multi-agent workflows.

Enterprise Use Case Example

Consider a customer support scenario: when a complex technical issue arises, the support AI (connected to knowledge bases and ticketing systems via MCP) can communicate through A2A with a specialized engineering AI (connected to code repositories and monitoring systems via MCP). The engineering AI can analyze the issue, suggest solutions, and even coordinate with a sales AI to understand customer impact, all through standardized protocols.
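A minimal sketch of this scenario, under heavy simplification: each agent holds its own tools (the "downward" data access) and a set of peers it can delegate to (the "lateral" link). The `Agent` and `SupportAgent` classes, the tool names, and the sample data are hypothetical; real deployments would route these calls through MCP servers and A2A endpoints instead of in-process method calls.

```python
from typing import Callable, Dict


class Agent:
    """Toy agent: private tools for data access plus peers it can delegate to."""

    def __init__(self, name: str, tools: Dict[str, Callable[[str], str]]):
        self.name = name
        self.tools = tools                    # "downward" access to data and systems
        self.peers: Dict[str, "Agent"] = {}   # "lateral" links to other agents

    def connect(self, peer: "Agent") -> None:
        self.peers[peer.name] = peer

    def handle(self, issue: str) -> str:
        # Answer from the first tool that returns a non-empty result.
        for tool in self.tools.values():
            answer = tool(issue)
            if answer:
                return f"{self.name}: {answer}"
        return f"{self.name}: no answer"


class SupportAgent(Agent):
    def handle(self, issue: str) -> str:
        local = super().handle(issue)
        if not local.endswith("no answer"):
            return local
        # Escalate to the engineering agent when the knowledge base has nothing.
        return self.peers["engineering"].handle(issue)


# Hypothetical data standing in for a knowledge base and a monitoring system.
knowledge_base = {"password reset": "Use the self-service portal."}
alerts = {"checkout timeout": "payment-service latency spike; rollback suggested"}

support = SupportAgent("support", {"kb": lambda q: knowledge_base.get(q, "")})
engineering = Agent("engineering", {"monitoring": lambda q: alerts.get(q, "")})
support.connect(engineering)

simple = support.handle("password reset")
escalated = support.handle("checkout timeout")
print(simple)
print(escalated)
```

The support agent never touches the monitoring data directly; it only knows that a peer named `engineering` exists and can be asked.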

This complementary architecture enables what we call "AI orchestration" – coordinated workflows involving multiple specialized AI agents, each with appropriate data access, working together to solve complex business challenges.

Why Google's A2A Donation Matters: The Linux Foundation Effect

The Linux Foundation's adoption of the Agent2Agent (A2A) protocol project signals the beginning of standardization in the AI agent ecosystem. Just as Kubernetes benefited from neutral governance and broad industry collaboration, A2A's placement under the Linux Foundation provides:

- Vendor Neutrality: No single company controls the protocol's evolution, encouraging broad adoption across the industry

- Community Development: Open governance enables rapid innovation and ensures the protocol evolves to meet real-world enterprise needs

- Enterprise Confidence: Linux Foundation backing provides the stability and longevity guarantees that enterprise architects require for strategic technology decisions

- Ecosystem Growth: Neutral governance encourages tool vendors, system integrators, and platform providers to build A2A-compatible solutions

The Coming Standardization Wave

The donation of A2A to the Linux Foundation, combined with the growing adoption of MCP, suggests we're entering a period of rapid standardization in enterprise AI architecture. Microsoft's announcement of support for the open A2A protocol demonstrates the cross-industry momentum building behind these standards.

This standardization will drive several key developments:

- Interoperability by Design: New AI applications will be built with MCP and A2A compatibility from the ground up, enabling seamless integration with existing enterprise AI deployments

- Specialized Agent Ecosystems: As communication protocols standardize, we'll see the emergence of highly specialized AI agents optimized for specific business functions – financial analysis, customer support, software development, legal review – that can collaborate through A2A protocols

- Multi-Vendor Strategies: Organizations will be able to select best-of-breed AI solutions for different use cases while maintaining interoperability through standard protocols

The Enterprise Management Challenge: Why Organizations Need AI Protocol Gateways

However, standardization brings new challenges. Just as Kubernetes created the need for sophisticated cluster management, monitoring, and security tools, MCP and A2A protocols will require enterprise-grade management infrastructure.

Organizations deploying multiple AI agents with various MCP connections and A2A communications will face:

- Security and Governance: Every MCP connection and A2A interaction must be secured, monitored, and governed according to enterprise policies and regulatory requirements

- Performance and Reliability: AI agent communications must be fast, reliable, and scalable to support business-critical workflows

- Multi-Tenant Complexity: Different departments and user groups need access to different AI agents and capabilities while maintaining appropriate isolation and access controls

- Protocol Lifecycle Management: As MCP servers and A2A endpoints evolve, organizations need centralized management of versions, configurations, and deployments

- Analytics and Optimization: Understanding how AI agents communicate, collaborate, and consume resources requires sophisticated monitoring and analytics capabilities
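Two of the concerns above, access control per team and an audit trail of every interaction, can be illustrated with a very small sketch. This is not palma.ai's product or API; the `Gateway` class, its `authorize` method, and the team and target names are hypothetical, and a production gateway would also need authentication, rate limiting, and persistent logs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Gateway:
    """Toy gateway: checks a per-team allowlist and records every decision."""
    allowlist: Dict[str, Set[str]]
    audit_log: List[Tuple[str, str, bool]] = field(default_factory=list)

    def authorize(self, team: str, target: str) -> bool:
        # Unknown teams get an empty allowlist, so they are denied by default.
        allowed = target in self.allowlist.get(team, set())
        self.audit_log.append((team, target, allowed))
        return allowed


gateway = Gateway(allowlist={
    "support": {"kb-server", "engineering-agent"},
    "finance": {"ledger-server"},
})

ok = gateway.authorize("support", "engineering-agent")
denied = gateway.authorize("support", "ledger-server")
print(ok, denied, gateway.audit_log)
```

Routing every connection through one decision point is what makes the audit log complete: denied requests are recorded alongside allowed ones.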

The palma.ai Solution: Enterprise AI Protocol Gateway

This is where palma.ai becomes essential. Just as organizations needed sophisticated Kubernetes management platforms to realize the full potential of container orchestration, they need comprehensive AI protocol gateways to manage MCP and A2A implementations at enterprise scale.

palma.ai provides the missing management layer for standardized AI protocols.

The Strategic Imperative: Preparing for the AI Protocol Era

The convergence of MCP and A2A protocols, backed by major technology companies and neutral governance, represents a fundamental shift in enterprise AI architecture. Organizations that prepare for this standardized future will gain significant competitive advantages:

- Vendor Flexibility: The ability to select and integrate best-of-breed AI solutions without vendor lock-in

- Scalable Complexity: Support for sophisticated multi-agent workflows that can scale across entire enterprises

- Future-Proof Architecture: Infrastructure that can evolve with emerging AI capabilities while maintaining interoperability

- Accelerated Innovation: Reduced integration overhead enables faster deployment of new AI capabilities and use cases

Conclusion: The Infrastructure Layer for AI's Future

Just as Kubernetes became the invisible infrastructure layer that enabled the cloud-native revolution, MCP and A2A protocols are becoming the invisible infrastructure that will enable the multi-agent AI revolution. Google's donation of A2A to the Linux Foundation marks the beginning of this standardization journey.

However, like any powerful infrastructure technology, these protocols require sophisticated management, security, and governance capabilities to realize their full enterprise potential. Organizations that invest in comprehensive AI protocol gateway solutions like palma.ai today will be best positioned to leverage the transformative capabilities of standardized, interoperable AI agent ecosystems.

The question isn't whether your organization will adopt MCP and A2A protocols – it's whether you'll have the infrastructure management capabilities to use them effectively, securely, and at scale. The AI protocol era is here, and the organizations that prepare now will define the next decade of enterprise AI innovation.

Read More

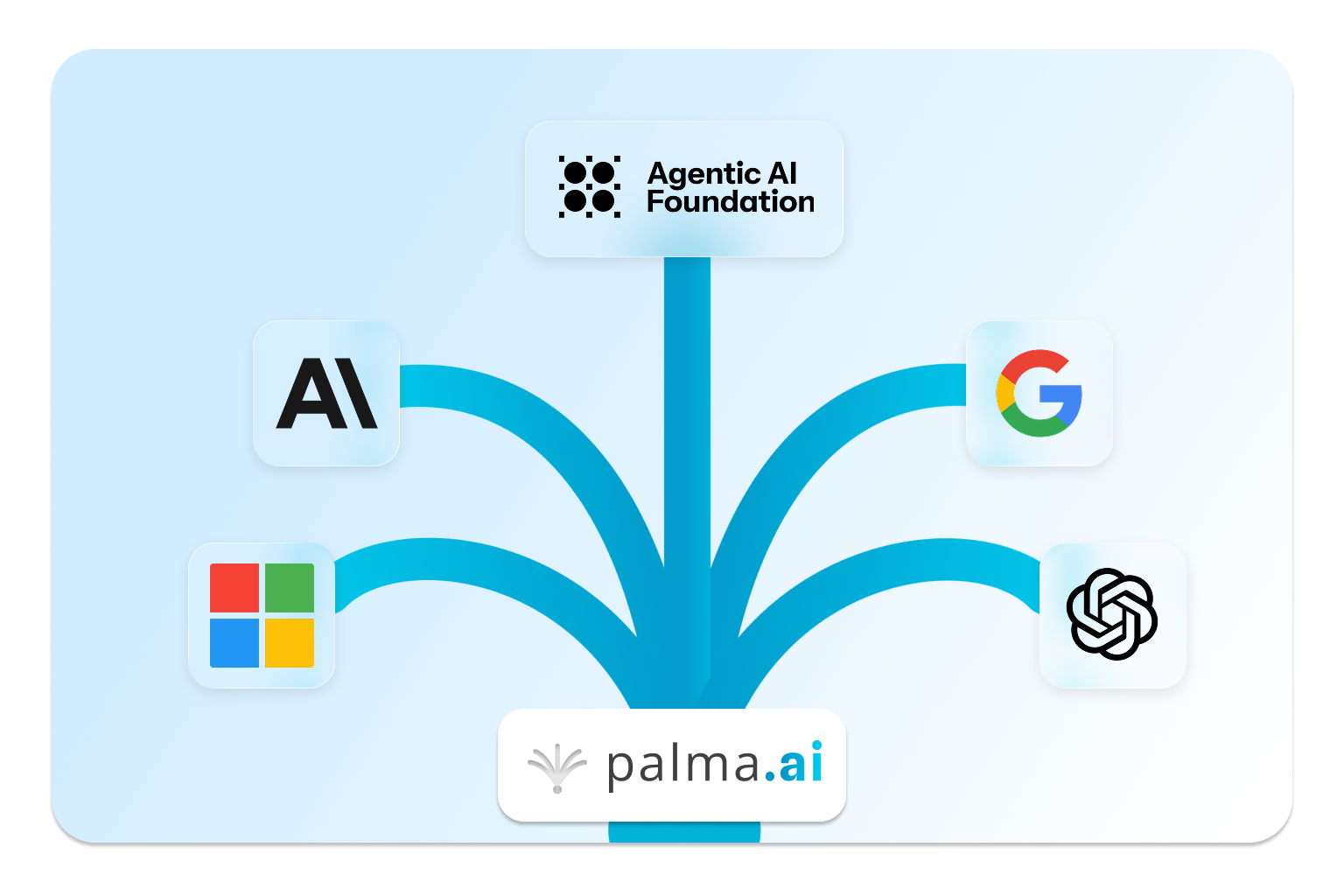

Palma.ai Joins OpenAI, Anthropic & Microsoft as AAIF Member

Palma.ai is proud to join the Agentic Artificial Intelligence Foundation (AAIF) as a Silver-tier member alongside founding members Anthropic, OpenAI, Google, AWS, and Microsoft to shape the future of AI agent standards.

Why Google's Agent-to-Agent Gift Feels Like Kubernetes All Over Again

Google's donation of the A2A protocol to the Linux Foundation mirrors what happened in 2015 with kubernetes. Patrick Gruhn was part of that wave with his previous company (exit to cisco), Replex.io, and now part of the next wave with palma.ai. This analysis explores how standardized AI protocol, will reshape enterprise AI infrastructure—and why having the right management layer matters now.

Ready to Future-proof your AI Strategy?

Transform your business with secure, controlled AI integration

Connect your enterprise systems to AI assistants while maintaining complete control over data access and user permissions.